Highly doubt it’s worth it in the long run, due to electricity costs alone.

Depends.

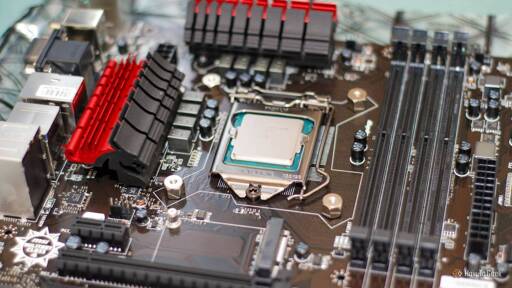

Toss the GPU/wifi, disable audio, throttle the processor a ton, and set the OS to power saving, and old PCs can be shockingly efficient.

You can slow the RAM down too. You don’t need XMP enabled if you’re just using the PC as a NAS, and XMP can be quite power hungry.

Eh, older RAM doesn’t use much. If it runs close to stock voltage, maybe just set it at stock voltage and bump the speed down a notch, then you get a nice task energy gain from the performance boost.

There was a post a while back from someone trying to eke every single watt out of their computer. Disabling XMP and running the RAM at the slowest speed possible saved something like 3 watts, I think. An impressive saving, but at the cost of HORRIBLE CPU performance. But you do actually need at least a little bit of grunt for a NAS.

At work we have some of those Atom-based NASes, and the combination of a weak CPU and horrendously slow single-channel RAM makes them absolutely crawl. A single HDD on its own performs the same as their RAID 10 array.

Yeah.

In general, ‘big’ CPUs have an advantage because they can run at much, much lower clockspeeds than atoms, yet still be way faster. There are a few exceptions, like Ryzen 3000+ (excluding APUs), which idle notoriously hot thanks to the multi-die setup.

Peripherals and IO will do that: the cores pull 5-6W while the IO die pulls 6-10W.

https://www.techpowerup.com/review/amd-ryzen-7-5700x/18.html

Same with auto overclocking mobos.

My ASRock board sets VSoC to a silly high voltage with EXPO. Setting that back down (and fiddling with some other settings, or disabling the IGP if you can) helps a ton.

…But I think AMD’s MCM chips just do idle hotter. My older 4800HS uses dramatically less, even with the IGP on.

And heat your room in the winter!

Add spring + autumn if you live up north.

Stuff designed for much higher peak usage tends to have a lot more waste.

For example, a 400W power source (which is what’s probably in the original PC of your example) will waste more power than a lower wattage one (unless it’s a very expensive one), so in your example it should be replaced by something much smaller.

Even beyond that, everything in there - another example, the motherboard - will have a lot more power leakage than something designed for a low power system (say, an ARM SBC).

Unless it’s a notebook, that old PC will always consume more power than, say, an N100 Mini-PC, much less an ARM based one.

> For example, a 400W power source (which is what’s probably in the original PC of your example) will waste more power than a lower wattage one

In my experience, power supplies are most efficient at around 50% load. be quiet! PSUs have charts showing this.

The way one designs hardware is to optimize for the most common usage scenario, with enough capacity to handle the peak usage scenario (plus some safety margin on top).

(In the case of silent power sources they would also include lower power leakage in the common usage scenario so as to reduce the need for fans, plus in the actual physical circuit design would also include things like airflow and having space for a large slower fan since those are more silent)

However specifically for power sources, if you want to handle more power you have to for example use larger capacitors and switching MOSFETs so that it can handle more current, and those have more leakage hence more baseline losses. Mind you, using more expensive components one can get higher power stuff with less leakage, but that’s not going to happen outside specialist power supplies which are specifically designed for high-peak use AND low baseline power consumption, and I’m not even sure if there’s a genuine use case for such a design that justifies paying the extra cost for high-power low-leakage components.

In summary, whilst theoretically one can design a high-power low-leakage power source, it’s going to cost a lot more because you need better components, and that’s not going to be a generic desktop PC power source.

That said, since silent PC power sources are designed to produce less heat, they have less leakage (power leakage is literally power turning into heat). Even though such a design targets the most common usage scenario of that power source (which is not going to be 15W), it would still probably mean better components and hence lower baseline leakage, so it should waste less power if that desktop is repurposed as a NAS. It still won’t beat a dedicated ARM SBC (not even close), but it might end up cheap enough to be worth it if you already have that PC with a silent power source.

All true, yep.

Still, the clocking advantage is there. Stuff like the N100 also optimizes for lower costs, which means higher clocks on smaller silicon. That’s even more dramatic for repurposed laptop hardware, which is much more heavily optimized for its idle state.

So I did this using a Ryzen 3600; with some light tweaking, the base system burns about 40-50W at idle. The drives add a lot, 5-10W each, but they would go into any NAS system, so that’s irrelevant. I had to add a GPU because my motherboard wouldn’t POST without one, which increases the power draw a little, but it’s also necessary for proper Jellyfin transcoding. I recently swapped the GPU for an Intel Arc A310.

By comparison, the previous system I used for this had a low-power, fanless Intel Celeron; with a single drive and two SSDs, it drew about 30W.

OK, I’m glad I’m not the only one who wants a responsive machine for video streaming.

I ran a Pi 400 with Plex for a while. I don’t care about saving 20W if I have to wait for the machine to respond after every little scrub of the timeline. I want a better experience than Netflix. That’s the point.

Eh, TBH I’d like to consume less power, but I mean, a 30-40W difference isn’t going to ruin me or the planet, I’ve got a rather efficient home all in all.

I have a 3600 in a NAS and it idles at 25W. My mobo luckily runs fine without a GPU; I pulled it out after the initial install.

Literally did this migration this weekend. Still need to install the A310 drivers and I don’t run Jellyfin (streaming handled client side with minidlna or SMB) but how do you find it?

Drivers? Are you running it on Windows? On Linux I just plugged it in and it worked, Jellyfin transparently started transcoding the additional codecs.

It fixed my issue with tone mapping, before this HDR files on my not-so-old TV showed the wrong colors.

I’ve no desktop environment on the NAS; it was plug and play in the terminal. I did get an error about HSW/BDW HD-Audio HDMI/DP requiring binding with a gfx driver, but I’ve not yet even bothered to google it.

I read somewhere that the Sparkle ELF I have just ramps the fan to 100% at all times with the Linux driver, with no option to edit the fan curve under Linux. (The suggested fix was to install a Windows VM and set the curve there, and the card will remember it, but after rebuilding the NAS and fixing a couple of minor issues to get it all working, I couldn’t face installing Windows, so I’ve left it as is until I have the time, lol.)

The host is running Proxmox, so I guess their kernel just works with it.

It does run the fan way more than I’d like, but its noise is drowned out by the original AMD cooler on the CPU anyway. Thanks for the info, though, I may look into it… But I guess I’d have to set up GPU passthrough to a VM just for that.

Yeah, I can’t say the fan noise has bothered me yet, but I wasn’t sure if that’s because I’m running some generic driver or others are just more sensitive to it than me. Jellyfin is at least fourth on my list of maintenance/upgrade tasks at the minute; as long as I can display the terminal, I’m happy enough.

A desktop running at low usage wouldn’t consume much more than a NAS, as long as you drop the video card (which wouldn’t be doing anything anyway).

Take only that extra and you probably have a few years of usage before the additional electricity costs overtake the NAS cost. Where I live that’s around 5 years for an estimated extra 10W.

> as long as you drop the video card

As I wrote below, some motherboards won’t POST without a GPU.

> Take only that extra and you probably have a few years of usage before the additional electricity costs overtake the NAS cost. Where I live that’s around 5 years for an estimated extra 10W.

Yeah, and what’s more, if one of those appliance-like NASes breaks down, how do you fix it? With a normal PC you just swap out the defective part.

Most modern boards will. Also there’s integrated graphics on basically every single current CPU. Only AMD on AM4 held out on having iGPUs for so damn long.

I’m still running a 480 that doubles as a space heater (I’m not even joking; I increase the load based on ambient temps during winter)

A 486, eh?

I am assuming that’s a GTX 480 and not an RX 480; if so - kudos for not having that thing melt the solder off the heatsink by now! 😅

The GTX 480 is efficient by modern standards. If Nvidia could make a cooler that could handle 600 watts in 2010 you can bet your sweet ass that GPU would have used a lot more power.

Well that and if 1000 watt power supplies were common back then.

If they’re gonna buy a nas anyway, how many years to break even?

I have an old Intel 1440 desktop that runs 24/7, hooked up to a UPS along with a Beelink mini PC, my router, and a PoE switch, and the UPS reports a combined 100W.

OK. Science time. The values are somewhat arbitrary; the point is that there’s an amortization calculation, and you’ll need to do your own with accurate input values.

A PC drawing 100W 24/7 uses 877 kWh @ $0.15/kWh = $131.49 per year.

A NAS drawing 25W 24/7 uses 219 kWh @ $0.15/kWh = $32.87 per year.

So, in this hypothetical case you “save” about $100/year on power costs running the NAS.

Assuming a capacity equivalent NAS might cost $1200, you’d need about 12 years of those savings to pay for it, so you’re better off using the PC you have.

This ignores that the heat generated by the devices is desirable in winter so the higher heat output option has additional utility.
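The arithmetic in this post can be reproduced with a short Python sketch. It assumes a flat $0.15/kWh tariff (the rate implied by the post’s figures) and a 365.25-day year, and it does not model the winter-heating benefit; the function names are illustrative, so swap in your own wattages, tariff and NAS price.

```python
# Minimal sketch: yearly energy cost of an always-on device and the payback
# period of replacing it. The 100 W / 25 W / $0.15 per kWh / $1200 figures are
# the hypothetical inputs from the post; substitute your own.

HOURS_PER_YEAR = 365.25 * 24  # 8766 h, averaging in leap years


def yearly_kwh(watts: float) -> float:
    """Energy used in one year by a device drawing `watts` continuously."""
    return watts * HOURS_PER_YEAR / 1000


def yearly_cost(watts: float, price_per_kwh: float) -> float:
    """Cost of running a device 24/7 for a year at a flat tariff."""
    return yearly_kwh(watts) * price_per_kwh


def payback_years(old_watts: float, new_watts: float,
                  price_per_kwh: float, new_device_cost: float) -> float:
    """Years of electricity savings needed to cover the new device's price."""
    savings = yearly_cost(old_watts, price_per_kwh) - yearly_cost(new_watts, price_per_kwh)
    return new_device_cost / savings


if __name__ == "__main__":
    price = 0.15  # $/kWh (assumed flat tariff)
    pc, nas = 100, 25  # watts
    print(f"PC:  {yearly_kwh(pc):.0f} kWh, ${yearly_cost(pc, price):.2f}/yr")
    print(f"NAS: {yearly_kwh(nas):.0f} kWh, ${yearly_cost(nas, price):.2f}/yr")
    print(f"Payback: {payback_years(pc, nas, price, 1200):.1f} years")
```

With these inputs the savings come to roughly $99 per year, so a $1200 NAS takes a little over 12 years to pay for itself.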

> Assuming a capacity equivalent NAS might cost $1200

Either you already have drives and could use them in a new NAS or you would have to buy them regardless and shouldn’t include them in the NAS price.

8 drives could go into most computers I think. Even 6 drive NAS can be quite expensive.

That’s not a NAS, that’s a whole-ass PC, though with only 8gb RAM. And way overpriced for the spec.

I bought a two-bay Synology for $270 and a 20TB HDD for $260. I did this for multiple reasons. The HDD was on sale, so I bought it and kept buying things. Also, I couldn’t be buggered to learn everything necessary to set up a homemade NAS. Also also, I didn’t have an old PC. My current PC is a Ship of Theseus that I originally bought in 2006.

You’re not wrong about an equivalent NAS to my current PC specs/capacity being more expensive. And yes, I did spend $500+ on my NAS. And yet I also saved several days’ worth of study, research, and trial and error by not building my own.

That being said, reducing e-waste by converting old PCs into Jellyfin/Plex streaming machines, NAS devices, or personal servers is a really good idea.

> This ignores that the heat generated by the devices is desirable in winter so the higher heat output option has additional utility.

But the heat is a negative in the summer. So local climate might tip the scales one way or the other.

In the UK the calculus is quite different, as it’s £0.25/kWh or over double the cost.

Also, an empty Synology 4-bay NAS can be gotten for like £200 second hand. Good enough if you only need file hosting. Mine draws about 10W compared to an old Optiplex that draws around 60W.

With that math, using the NAS saves you 1.25 pence per hour. Therefore the NAS pays for itself in about 2 years.
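The UK figures check out. Here is a minimal sketch using the commenter’s rough inputs (a ~60W Optiplex versus a ~10W Synology, £0.25/kWh, a £200 second-hand NAS) and a 365-day year; the function names are illustrative.

```python
# Minimal sketch of the UK comparison: old Optiplex (~60 W) vs second-hand
# Synology (~10 W) at £0.25/kWh, NAS bought for £200. All inputs are the
# commenter's rough figures, not measurements of any particular setup.

HOURS_PER_YEAR = 24 * 365


def savings_per_hour_pence(old_w: float, new_w: float, pence_per_kwh: float) -> float:
    """Pence saved each hour by switching from old_w to new_w watts."""
    return (old_w - new_w) / 1000 * pence_per_kwh


def years_to_pay_off(price_pounds: float, old_w: float, new_w: float,
                     pence_per_kwh: float) -> float:
    """Years until the hourly savings add up to the purchase price."""
    pence_per_year = savings_per_hour_pence(old_w, new_w, pence_per_kwh) * HOURS_PER_YEAR
    return price_pounds * 100 / pence_per_year


print(f"{savings_per_hour_pence(60, 10, 25):.2f}p saved per hour")
print(f"{years_to_pay_off(200, 60, 10, 25):.1f} years to pay off")
```

A 50W difference at 25p/kWh is 1.25p per hour, or about £110 per year, so a £200 NAS pays for itself in just under 2 years.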

My gaming PC runs at around 50W idle and only draws a ton of power when it’s being used for something. It would be more accurate to assume a PC draws about 1.75x the power of a NAS, but then account for the cost of buying a NAS. I’d say a NAS would probably take 2-4 years to pay off, depending on regional power prices.

… 100W? Isn’t that from a really bygone era? CPUs of the past decade can idle at next to nothing (like, there isn’t much difference between an idling i7/i9 and a Pentium from the same era/family).

Or are we talking about ARM? (Sorry, I don’t know much about them.)

All devices on the computer consume power.

The CPU being the largest in this context. Older processors usually don’t have as aggressive throttling as modern ones for low power scenarios.

Similarly, the performance per watt of newer processors is incredibly high in comparison, meaning they can run the same workload at much lower power levels.

I think we need to qualify “idling”, my NAS runs bittorrent with thousands of torrents, so it’s never really “idle”, it just isn’t always doing intensive processing such as transcoding.

I have a Kill A Watt-like device. I measured my old PC at around 110W. PC specs: i5-6600, 16GB DDR4 RAM, GTX 1060 3GB, 1x 2TB HDD, 1x 250GB SATA SSD, 1x 1TB M.2 SSD.

I used to think it didn’t matter how electricity is used to generate heat, so I came to the same conclusion you did. Surprisingly, it does matter. Rather than a computer’s resistive heating, it is much more efficient to refrigerate the outdoors and point the refrigerator’s heat sink indoors. This is how a heat pump works. It’s basically awesome.

In the fall/winter in northern areas it’s free! (Money that would already be spent on heating).

Summer is a negative, though, as the air conditioning needs to keep up. But the additional cost is roughly 1/3 of the heat output for most ACs (100W of heat requires less than 30W of AC power to move).
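The heat-pump point can be put into numbers with a coefficient of performance (COP): heat moved divided by electricity used. The claim of under 30W per 100W of heat corresponds to a cooling COP above about 3.3. This sketch assumes a cooling COP of 3.5 and a heating COP of 3.0, plausible residential figures that in reality vary with the unit and the outdoor temperature.

```python
# Minimal sketch of heat-pump arithmetic. COP = heat moved / electricity used.
# The COP values below are assumptions, not specs of any particular unit.


def ac_power_to_remove(heat_watts: float, cop: float) -> float:
    """Extra electrical power an AC needs to pump `heat_watts` back outdoors."""
    return heat_watts / cop


def heat_pump_output(electric_watts: float, cop: float) -> float:
    """Heat delivered indoors by a heat pump drawing `electric_watts`."""
    return electric_watts * cop


pc_heat = 100  # W: essentially all of a PC's draw ends up as heat in the room
print(f"Summer: ~{ac_power_to_remove(pc_heat, 3.5):.0f} W extra at the AC")
print(f"Winter: 100 W into a heat pump gives ~{heat_pump_output(100, 3.0):.0f} W of heat,")
print("versus 100 W of heat from the PC's resistive heating")
```

This is why a PC is a "free" heater only if the alternative is resistive heating; against a heat pump, the same 100W of electricity buys several times as much heat.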

If your PC has 32gb of RAM or more throw it away (in my trash bin) immediately.

Nah. I disagree. My dedicated NAS system consumes around 40W idling and is a very small machine. My old PC would use 100W idling and is in an ATX-sized case. Of course I could use my old PC as a NAS, but these two are different categories of device.

I want to reduce wasteful power consumption.

But I also desire ECC for stability and data corruption avoidance, and hardware redundancy for failures (Which have actually happened!!)

Begrudgingly, I’m using Dell rack-mount servers. For the most part they work really well: stupid easy to service, unified remote management, lotssss of room for memory, thick PCIe lane counts, stupid cheap second-hand RAM, and stable.

But they waste ~100 watts of power per device, though… That stuff adds up, even if we have incredibly cheap power.

I use my old pc server as a 50w continuous heater in my lab-shed which is a small stone outbuilding. Keeps it dry in there!

I bought a used Coffee Lake-era Xeon 2224G workstation with 32GB of ECC RAM to use as a NAS. It uses 15 watts at the wall, measured with a Kill A Watt, while streaming 4K with Plex.

Nah nah I disagree. My i7 8700k old PC server runs around 60 watts with 4 hard disks and ~30 running containers. It’s a large machine so that I can easily expand with more drives but I can easily buy a smaller mobo on the used market if I wanted something smaller. Depending on how old your NAS is, and what you are doing with it, PC may be more power efficient.

And as usual everyone is saying NAS, but talking about servers with a built in NAS.

I’m not saying you can’t run your services on the same machine as your NAS, I’m just confused why every time there’s a conversation about NASs it’s always about what software it can run.

At this point you’re just fighting semantics. Even a commercial NAS is reliant on the software too, like with Synology. They run the disk management but also can run Docker and VMs with their built-in hypervisor.

If we lose the meaning of the word NAS, then we can’t effectively talk about it. And enough new people are coming in and being taught that a NAS is the thing that runs Plex that it’s sometimes impossible to have a productive conversation.

What in the hell are you talking about?

The way I see it, a box of drives still needs something to connect it to your network.

And that something that can only do a basic connection costs only a little less than something that can run a bunch of other stuff too.

You can see why it all gets bundled together.

For me it’s not about what else I can run on it. I want my services separated from my storage devices. If I throw everything into one physical machine, it takes everything down if something goes wrong. It’s also harder to do upgrades without needing to replace entire machines.

I started my media server in 2020 with an i7 3770 Dell tower I snagged out of the e-waste pile. It ran Jellyfin, audiobookbay, Navidrome, Calibre-Web, and an arr stack with about a dozen users like a champ. Old hardware rules if you don’t use Windows.

Big shout out to Windows 11 and their TPM bullshit.

I was thinking that my wee “Raspberry Pi home server” was starting to feel the load a bit too much and wanted a bit of an upgrade. A local business was throwing out some cute little mini PCs since they couldn’t run Win11. Slap in a spare 16 GB memory module and a much better SSD that I had lying about, and it runs Arch (btw) like an absolute beast. It runs Forgejo, Postgres, DHCP, a torrent and file server, active mobile phone backup, etc. while sipping 4W of power. Perfect; a much better fit than an old desktop keeping the house warm.

Have to think that if you’ve been given a work desktop machine with a ten-year old laptop CPU and 4GB of RAM to run Win10 on, then you’re probably not the most valued person at the company. Ran Ubuntu / GNOME just fine when I checked it at its original specs, tho. Shocking, the amount of e-waste that Microsoft is creating.

Question, what’s the benefit of running a separate DHCP server?

I run openwrt, and the built in server seems fine? Why add complexity?

I’m sure there’s a good reason I’m just curious.

The router provided with our internet contract doesn’t allow you to run your own firmware, so we don’t have anything so flexible as what OpenWRT would provide.

Short answer; in order to Pi-hole all of the advertising servers that we’d be connecting to otherwise. Our mobile phones don’t normally allow us to choose a DNS server, but they will use the network-provided one, so it sorts things out for the whole house in one go.

Long, UK answer: because our internet is being messed with by the government at the moment, and I’d prefer to be confident that the DNS look-ups we receive haven’t been altered. That doesn’t fix everything - it’s a VPN job - but little steps.

The DHCP server provided with the router is so very slow in comparison to running our own locally, as well. Websites we use often are cached, but connecting to something new takes several seconds. Nothing as infuriating as slow internet.

Buy another router that lets you run OpenWrt or anything else you fancy, and use the locked-down one just as a gateway to the new one; problem solved. My setup is somewhat similar: a locked-down cable modem/router that I can’t customize, plus a Netgear router I bought and installed FreshTomato on.

Oh you mean DNS server, yes ok that makes sense. Yeah I totally understand running your own.

If I understand correctly, DHCP servers just assign local IPs on initial connection and configure other stuff, like pointing devices to the right DNS server, gateway, etc.

Sorry, putting the two things together, my mistake. My router doesn’t let you specify the DNS server directly, but it does allow you to specify a different DHCP server, which can then hand out new IPs with a different DNS server specified, as you say. Bit of a house of cards. DHCP server in order to be the DNS server too.

Gotcha! No worries. Networking gets more and more like sorcery the deeper you go.

Networking and printers are my two least favorite computer things.

So on mine, I haven’t bothered to change from the ISP-provided router, which is mostly adequate for my needs, except that I need to do some DNS shenanigans, so I take over DHCP to specify my DNS server, which is beyond the customization the ISP router provides.

Frankly, I’ve been thinking of an upgrade because it doesn’t do NAT loopback, and while I currently work around that with different DNS results for local queries, it’s a bit wonky. I’m also starting to get Wi-Fi 7 devices and could use an excuse to upgrade to something more in my control.

That makes sense. I haven’t used an ISP configured router in over a decade. At my parents house, their modem/router combo didn’t support bridge mode so I put it in a DMZ and slapped that to the WAN port on my router. Worked well.

True for notebooks. (For years my home NAS was an old Asus EEE PC)

Desktops, on the other hand, tend to consume a lot more power (how bad it is depends on the generation). They’re simply not designed to be a quiet device sitting in a corner continuously running a task with low CPU demand: stuff designed for much more demanding tasks will have things like much bigger power sources, which are less efficient at low power demand (when something is designed to put out 400W, wasting 5 or 10W is no big deal; when it’s designed to put out 15W, wasting 5 or 10W would make it horribly inefficient).
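The 5-10W argument can be quantified by treating the power supply’s loss as a fixed overhead, a simplification (real PSU losses also vary with load, as manufacturers’ efficiency curves show). Efficiency is then just power delivered divided by power drawn from the wall.

```python
# Minimal sketch: model PSU loss as a fixed overhead (a simplification; real
# losses also change with load). Efficiency = delivered / drawn from the wall.


def efficiency(load_w: float, loss_w: float) -> float:
    """Fraction of wall power that actually reaches the components."""
    return load_w / (load_w + loss_w)


for load in (15, 100, 300):
    for loss in (5, 10):
        print(f"{load:>3} W load, {loss:>2} W lost: {efficiency(load, loss):.0%} efficient")
```

The same 10W of waste costs only about 3% at a 300W load but 40% at a 15W load, which is exactly the regime an idling NAS lives in.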

Meanwhile, the typical NAS out there runs an ARM processor (known for low power consumption) or, at worst, a low-power Intel processor such as the N100.

Mind you, the idea of running your own NAS software is great (one can do way more with it than with a proprietary NAS, since it’s far more flexible), as long as you put it on the right hardware for the job.

I have used laptops like this and I find that eventually the cooling system fails, probably because they aren’t meant to run all the time like a server. Various brands, including Dell, Lenovo, MSI, and Apple. Maybe it’s the dust in my house, I don’t know.

Yeah, different hardware is designed for different use cases and generally won’t work as well for other use cases, which is also why desktops seldom make great NAS servers (their fans will also fail from constant use, plus their design spec is for much higher power usage, so they have a lot more power waste even when throttled down).

That said, my ASUS EEE PC lasted a few years on top of a cabinet in my kitchen (which is where the Internet came into my house, so the router was also there) with a couple of external HDDs plugged in, and that’s a bit of a hostile environment (some of the particulates from cooking, including fat, don’t get pulled out and end up accumulating there).

At the moment I just have a Mini-PC in my living room with a couple of external HDDs plugged in that works as NAS, TV media box, and home server (including a WireGuard VPN on top of a 1Gbps connection, which at peak is somewhat processor intensive). It’s an N100 and the whole thing has a TDP of 15W, so the fan seldom activates. So far that seems to be the best long-term solution, plus it’s multi-purpose, unlike a proprietary NAS. It’s some of the best €140 (not including the HDDs) I’ve ever spent.

How does a notebook—outside of including a DAS—provide meaningful storage volume?

When I had my setup with an ASUS EEE PC I had mobile external HDDs plugged to it via USB.

Since my use case was long-term storage and feeding video files to a TV media box, the bandwidth limit of USB 2.0 and using HDDs rather than SSDs was fine. Also, back then I had 100Mbps Ethernet, so that limited bandwidth too.

Even in my current setup, where I use a Mini-PC to do the same, the storage is still external portable HDDs, and now the bandwidth limits are 1Gbps Ethernet and USB 3.0, which is still fine for my use case.

Because my use case now is long-term storage, home file sharing, and torrenting, my home network follows the same principles as distributed systems and modern microprocessor architectures: smaller, faster data stores holding frequently used data close to where it’s used (for example, fast smaller SSDs with the OS and game executables inside my gaming machine, plus a torrent server inside that same Mini-PC using its internal SSD), then layered outwards with decreasing speed and increasing size (that same desktop machine has an internal “storage” HDD filled with rarely used files, and one network hop away there’s the Mini-PC NAS sharing its external HDDs containing longer-term storage files).

The whole thing tries to balance storage costs with usage needs.

I suppose I could improve performance a bit more by setting up some of the space on the Mini-PC’s internal SSD as a read/write cache for the external HDDs, but so far I haven’t had the patience to do it.

I used to design high-performance distributed computing systems, and funnily enough my home setup follows the same design principles (which I hadn’t noticed until thinking about it now as I wrote this).

The main concern with old hardware is probably powerdraw/efficiency, depending on how old your PC is, it might not be the best choice. But remember: companies are getting rid of old hardware fairly quickly, they can be a good choice and might be available for dirt cheap or even free.

I recently replaced my old Synology NAS from 2011 with an old Dell Optiplex 3050 workstation, the kind companies throw away. The system draws almost twice the power (25W) of my old Synology NAS (which drew only 13W, both with 2 spinning drives), but the increase in processing power and flexibility from using TrueNAS is very noticeable, and it allowed me to also replace an old Raspberry Pi (6W) that only ran Pi-hole.

So overall, my new home-server is close in power draw to the two devices it replaced, but with an immense increase in performance.

My NAS draws about 25w (without drives). Show me an old PC with 6 3.5” drive bays that draws 25w.

At idle or under normal load? I’m tempted to get the Kill A Watt out.

Idle. Under load a bit more. It’s a mobile chipset, so it is efficient. Not as powerful as a desktop for sure but totally handles my basic workloads.

Ok. R5 3600, rtx 3070, and 4 spinning drives. Idles at 62W. 80W under normal load (2 concurrent streams). This is a hilariously over specced NAS. This is all 2nd or 3rd life pc parts (outside of the spinning rust), so financially speaking I’m happy with the result.

The long term goal is to use it as a homelab separate from anything I need to work all the time. I want to try running some LLMs locally and use it to control some home automation stuff. That’ll stress it.

Edit: so yeah, it’s double yours.

That’s pretty good, though! I kept reading about how much power video cards draw. I need to take some measurements of mine under various loads. The big part is obviously the drives.

I’ve got a 12V DC mini PC as my NAS/Jellyfin server with 6 SSDs and no monitor; it idles around 30 watts and runs entirely off solar. It ain’t fast, but it does everything I need it to do and costs zero dollars to run.

Those are good. I got tired of dealing with the unique trouble that comes from having drives attached via a USB JBOD. Also, it was just a Celeron and kinda melted down if I ran motion detection with Scrypted.

The Xeon 2224G workstation with 32GB of ECC RAM I got on eBay pulls 15 watts from the wall streaming 4K video on Plex.

It didn’t have 6 bays, but if I needed them I could move the guts to a bigger case.

My main application server is a middling office desktop computer from 2011. Runs dozens of services without a sweat.

NAS, no. Server for my random docker containers or whatever project I’m screwing around with, yes.

I somehow doubt that.

My last desktop PC has been retasked as an HTPC. The CPU in it requires a graphics card for the system to POST, it’s currently mounted in a SFF case with barely room for two 2.5" drives, so it would either make for a shitty, difficult to service, bulky for what it does, power inefficient NAS, or I’d have to buy a new case and CPU.

My current machine is in an mATX mini-tower, there’s room for hard disks and the 7700X has integrated graphics so I could haul the GPU out, but it’s still kind of bulky for what you’d get.

So I’m gonna keep my Synology in service for a little while longer, then build a NAS from scratch selecting components that would be good for that purpose.

This is my rig, I already bought 20 30TB disks, how do I proceed?

Your system is a POS.

First choose a version of linux to install on it, then you can proceed.

Atm it’s running Proxmox, but I’ll go bare metal with a fresh install of Hannah Montana Linux, I want to do things proper this time!

I operate my hard drives totally external to my old PC’s case, with a 3D-printed holder keeping them together (with a little space between each drive for ventilation). It’s a little ugly, but it lives in a closet, so I don’t really care how it looks. More importantly, with my old Netgear NAS I didn’t realize just how much speed I was missing out on. I guess with a modern Synology unit with an SSD cache you’ll likely get similar performance, but it’s so convenient to be able to run Docker containers and VMs on the same machine.

My UnRAID server is an HP desktop machine from 2011. More than capable of running dozens of services without tons of storage.

The number one concern with a NAS is the power draw. I can’t think of many systems that run under 30W.

How about a Raspberry Pi? I’ve got one (Raspberry Pi 400) running my Home Automation setup with a couple USB 3.0 ports. Was thinking there’s gotta be some add-ons for Home Assistant to put some external storage to good use.

Don’t need anything too fancy. Just looking for some on-site backup and maybe some media storage.

For backups it will be fine. Same for media storage. But if you want media streaming from the device (like plex) then you’ll want something better.

Yeah, I guess I should have made clear that’s part of what I was thinking (although, to be honest, I’m mostly a schmuck who pays for a few streaming services and uses those).

What exactly would be the main choking point? Horsepower of the Pi to take that stored file and stream it to the client?

So I believe the Pi 4 was the 1st to have an actual ethernet controller and not just having essentially a built in USB to ethernet adapter so bandwidth to your HDDs/ethernet shouldn’t be a problem.

Streaming directly off the Pi should be tolerable. A bit slower than a full-fat computer with tons of RAM for caching and CPU power to buffer things, but fine. There are some quirks with USB-connected HDDs that make them a bit slower than they should be (still, in 2025, UASP isn’t a given somehow). But streaming ultimately doesn’t need that much bandwidth.

What’s going to be unbearable is transcoding. If you’re connecting some shitty-ass smart TV that only understands H.264 and your videos are H.265, they have to be converted, and that SUCKS. Plex by default also likes to play videos at a lower bitrate sometimes, which means transcoding.
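The decision described here can be sketched as a tiny function. This is a hypothetical illustration, not Plex or Jellyfin internals: the `Client` type, the codec names and the 20 Mbps default cap are all assumptions. A file is played as-is only if the client can decode its codec and the allowed bitrate covers it; anything else forces the server (here, the Pi) to re-encode.

```python
# Hypothetical sketch of a media server's direct-play vs transcode decision.
# Client, codec names and the bitrate cap are illustrative assumptions.
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Client:
    supported_codecs: set[str] = field(default_factory=set)
    max_bitrate_kbps: int = 20_000  # e.g. a quality setting chosen in the app


def needs_transcode(file_codec: str, file_bitrate_kbps: int, client: Client) -> bool:
    """True if the server has to re-encode the video for this client."""
    if file_codec not in client.supported_codecs:
        return True  # e.g. an H.265 file sent to an H.264-only smart TV
    return file_bitrate_kbps > client.max_bitrate_kbps  # quality cap below the file's bitrate


old_tv = Client(supported_codecs={"h264"})
print(needs_transcode("hevc", 8_000, old_tv))   # True: codec unsupported
print(needs_transcode("h264", 8_000, old_tv))   # False: direct play
print(needs_transcode("h264", 40_000, old_tv))  # True: bitrate over the cap
```

Only the first and third cases put real load on the server; direct play is just file serving, which a Pi handles fine.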

There’s also other weird quirks to look out for. Like someone else was (I think) doing exactly what you wanted to do, but no matter what the experience was unbearable. Apparently LVM was somehow too much compute for the pi to handle, and as soon as they switched to raw EXT4 they could stream perfectly fine. I don’t remember why this was a problem, but it’s just kind of a reminder of how weak these devices actually are compared to “full” computers.

I think you can install OpenMediaVault on that; at least I used to run it on a Pi 3 with a USB drive. Then just run whatever Docker containers you wish.

IIRC, Raspberry Pis aren’t great as big-storage NASes due to limited I/O, but for a small amount of home storage they’re more than adequate.

You can use it as an SMB server and mount it from your other devices. There’s an official add-on for it with Samba in the name.

I’ve got 2 rPis: a Pi 5 running Home Assistant and a Pi 4 with a USB drive caddy acting as little more than a NAS (it also does all the downloading through Radarr etc.).

I find them perfectly adequate.

My gaming rig acts as my emby server as it’s basically on all the time and it has a beefy gfx card that can handle transcoding.