I’m not moving any goalposts. You’re the one arguing about the semantics around “Plasma”, and I keep saying that’s irrelevant.

Refer back to my original comment which was, and I quote:

> So, are there any plans to reduce the bloat in KDE, maybe even make a lightweight version (like LXQt) that’s suitable for older PCs with limited resources?

To clarify, here I was:

- Referring to KDE + default apps that are part of a typical KDE installation

- Stating that a typical KDE installation is bloated compared to a typical lightweight DE like LXQt

- Using “bloat” in a RELATIVE sense, with respect to an older PC with limited resources

The ENTIRE point of my argument was that KDE isn’t really ideal, RELATIVELY speaking, for older PCs with limited resources, and I’m using LXQt here as a reference.

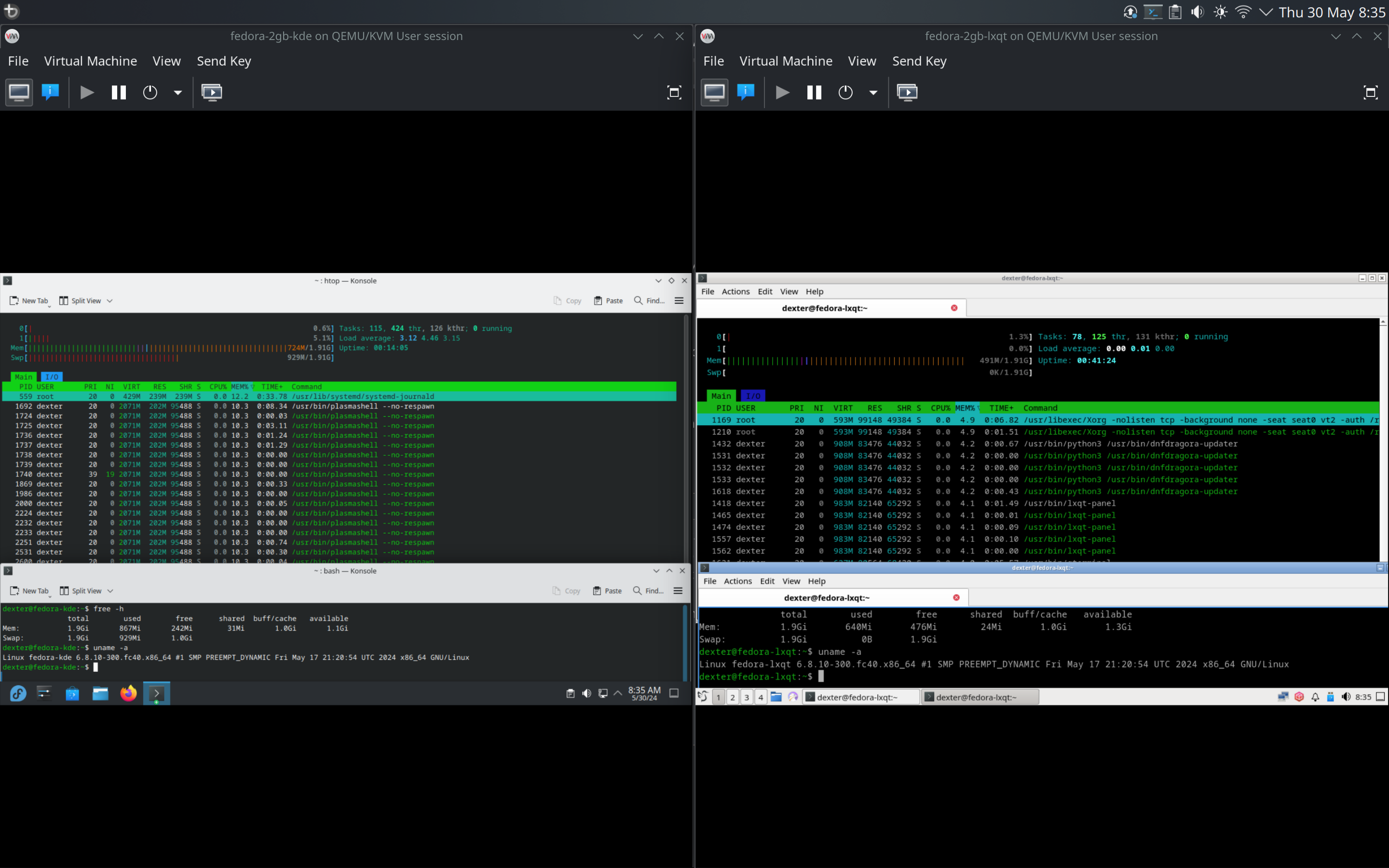

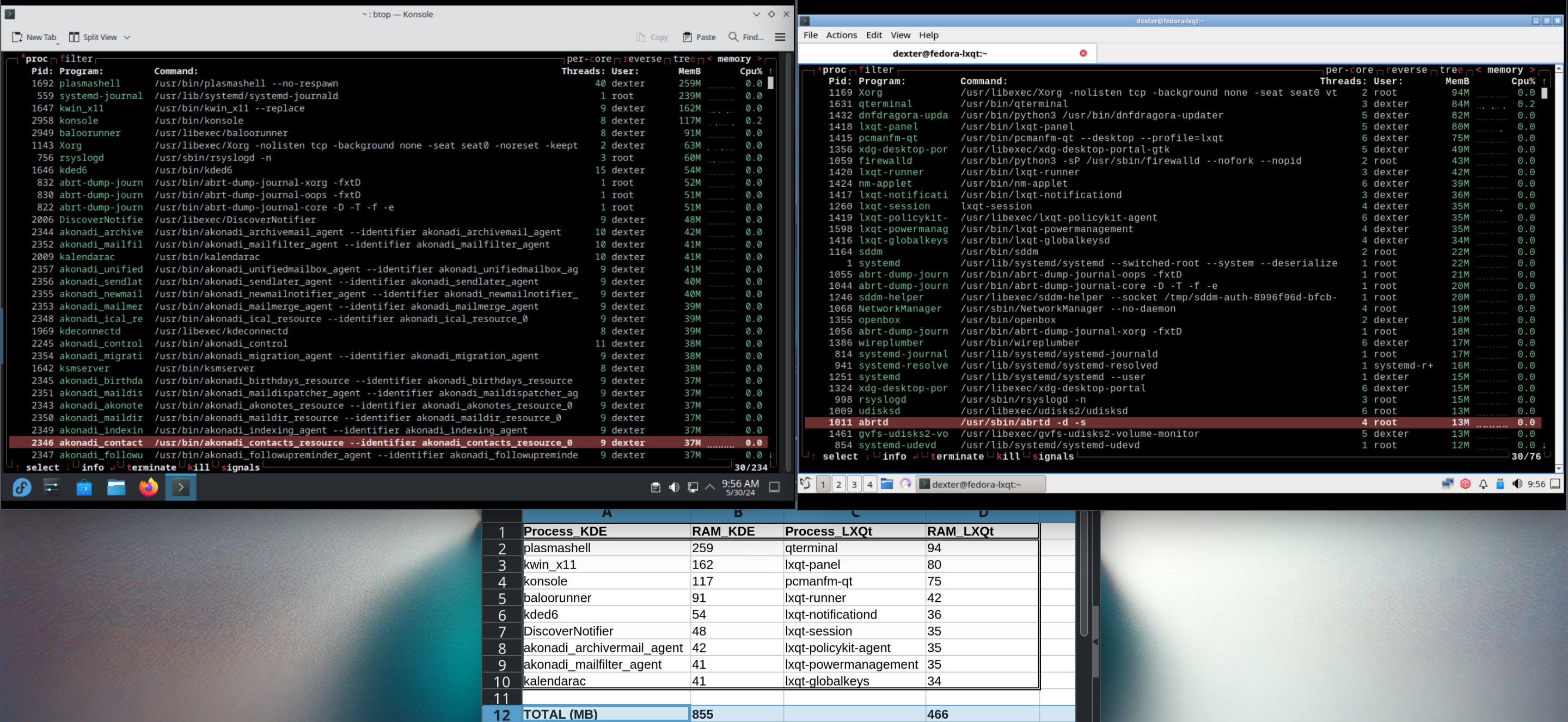

In a subsequent test, here’s a direct apples-to-apples(ish) component comparison:

| Component | KDE process | KDE RAM (MB) | LXQt process | LXQt RAM (MB) |
|---|---|---|---|---|
| WM | kwin_x11 | 99 | openbox | 18 |
| Terminal | konsole | 76 | qterminal | 75 |
| File Manager | dolphin | 135 | pcmanfm-qt | 80 |
| File Archiver | ark | 122 | lxqt-archiver | 73 |
| Text Editor | kwrite | 121 | featherpad | 73 |
| Image Viewer | gwenview | 129 | lximage-qt | 76 |
| Document Viewer | okular | 128 | qpdfview-qt6 | 51 |
| Total | | 810 | | 446 |

plasmashell was sitting at 250MB in this instance, btw.

The numbers speak for themselves - no one in their right mind would consider KDE (or plasmashell, since you want to be pedantic) to be “light” in RELATION to an older PC with limited resources - which btw, was the premise of my entire argument. Of course KDE or plasmashell might be considered “light” on a modern system, but not on an old PC with 2GB RAM. Whether something is considered light or bloated is always relative, and in this instance, it’s obvious to anyone that KDE/plasmashell isn’t “light”.
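For anyone who wants to check the arithmetic, here's a rough Python sketch that sums the per-app figures from the table and expresses each total as a share of a 2GB machine. The RAM numbers are copied from the table; treating "2GB" as 2048 MB of usable memory is an assumption, and plasmashell is left out because the table has no LXQt counterpart for it.

```python
# RAM usage in MB, copied from the comparison table.
kde = {"kwin_x11": 99, "konsole": 76, "dolphin": 135, "ark": 122,
       "kwrite": 121, "gwenview": 129, "okular": 128}
lxqt = {"openbox": 18, "qterminal": 75, "pcmanfm-qt": 80, "lxqt-archiver": 73,
        "featherpad": 73, "lximage-qt": 76, "qpdfview-qt6": 51}

# Assumption: a "2GB" PC has 2048 MB available (in practice it is less).
TOTAL_RAM_MB = 2048


def summarize(apps, total_ram_mb=TOTAL_RAM_MB):
    """Return (total MB used, percentage of total RAM rounded to 1 decimal)."""
    used = sum(apps.values())
    return used, round(100 * used / total_ram_mb, 1)


kde_total, kde_pct = summarize(kde)
lxqt_total, lxqt_pct = summarize(lxqt)

print(f"KDE apps:  {kde_total} MB ({kde_pct}% of {TOTAL_RAM_MB} MB)")
print(f"LXQt apps: {lxqt_total} MB ({lxqt_pct}% of {TOTAL_RAM_MB} MB)")
print(f"Difference: {kde_total - lxqt_total} MB")
```

This only compares the seven listed processes with everything open at once, so it's a ballpark for the relative gap, not a measurement of either desktop as a whole.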

Serenity for sure. I love the 90s aesthetic and would like to see it make a comeback. At the very least I’d like to see their Ladybird browser become mainstream - we really need more alternatives to the Chromium family.