So yes, you can visit your favorite blog, but it’s still not the same as it was in the 90s or early 00s.

It absolutely is. I might argue podcasts have kinda usurped the old blogging space (or, at least, supplanted it). But I’ve got an RSS feed full of blogs I follow that are barely different from what I was looking at 30 years ago. The 90s are alive on Feedly.

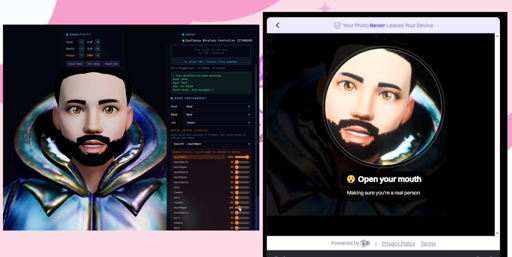

Fucken computers bullshit, it’s fucken sick

Lolz.

Who is going to remove it? Trump’s friend Tim Cook? Trump’s friend Jeff Bezos? Trump’s friend Sundar Pichai? Or Trump’s friend Satya Nadella?