Usually people say “premature optimization is the root of all evil” to mean “small optimizations are not worth it”.

No, that’s not what people mean. They mean measure first, then optimize. Small optimizations may or may not be worth it. You don’t know until you measure using real data.

They mean measure first, then optimize.

This is also bad advice. In fact I would bet money that nobody who says that actually always follows it.

Really there are two things that can happen:

-

You are trying to optimise performance. In this case you obviously measure using a profiler because that’s by far the easiest way to find places that are slow in a program. It’s not the only way though! This only really works for micro optimisations - you can’t profile your way to architectural improvements. Nicholas Nethercote’s posts about speeding up the Rust compiler are a great example of this.

-

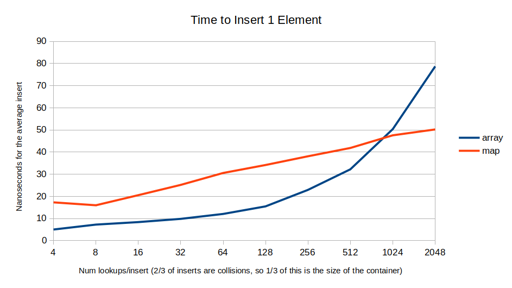

Writing new code. Almost nobody measures code while they’re writing it. At best you’ll have a CI benchmark (the Rust compiler has this). But while you’re actually writing the code it’s mostly find just to use your intuition. Preallocate vectors. Don’t write O(N^2) code. Use

HashSetetc. There are plenty of things that good programmers can be sure enough are the right way to do it that you don’t need to constantly second guess yourself.

-
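To make the "intuition" items concrete, here is a minimal Rust sketch of order-preserving deduplication written two ways. The function names and the sample data are made up for illustration; the point is only that the `HashSet` version with preallocated collections costs nothing extra to write.

```rust
use std::collections::HashSet;

/// Quadratic version: `Vec::contains` rescans `seen` for every input item.
fn unique_quadratic(items: &[u32]) -> Vec<u32> {
    let mut seen = Vec::new();
    for &item in items {
        if !seen.contains(&item) {
            seen.push(item);
        }
    }
    seen
}

/// Linear on average: `HashSet::insert` returns `true` only for values
/// that were not already in the set. Both collections are preallocated.
fn unique_linear(items: &[u32]) -> Vec<u32> {
    let mut seen = HashSet::with_capacity(items.len());
    let mut out = Vec::with_capacity(items.len());
    for &item in items {
        if seen.insert(item) {
            out.push(item);
        }
    }
    out
}

fn main() {
    let items: [u32; 5] = [3, 1, 3, 2, 1];
    // Both versions keep the first occurrence of each value, in order.
    assert_eq!(unique_quadratic(&items), unique_linear(&items));
    println!("{:?}", unique_linear(&items));
}
```

For five elements the two are indistinguishable; the difference only shows up once the input grows, which is exactly when nobody goes back to fix it.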

Exactly. A 10% decrease in run time for a method is a small optimization most of the time, but whether or not it’s premature depends on whether the optimization has other consequences. Maybe you lose functionality in some edge cases, or maybe it’s actually 10x slower in some edge case. Maybe what you thought was a bit faster is actually slower in most cases. That’s why you measure when you’re optimizing.
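As a rough illustration of "measure when you’re optimizing", here is a tiny timing helper built on `std::time::Instant`. The helper `timed` and the two summing functions are hypothetical stand-ins, and a single run like this is noisy; treat it as a sketch, not a replacement for a proper benchmark harness or a release build run on real data.

```rust
use std::time::{Duration, Instant};

/// Runs `f` once and returns its result together with the elapsed wall-clock time.
fn timed<T>(f: impl FnOnce() -> T) -> (T, Duration) {
    let start = Instant::now();
    let result = f();
    (result, start.elapsed())
}

/// Candidate A: explicit loop.
fn sum_loop(data: &[u64]) -> u64 {
    let mut total = 0;
    for &x in data {
        total += x;
    }
    total
}

/// Candidate B: iterator adapter.
fn sum_iter(data: &[u64]) -> u64 {
    data.iter().sum()
}

fn main() {
    let data: Vec<u64> = (0..1_000_000).collect();
    let (a, t_a) = timed(|| sum_loop(&data));
    let (b, t_b) = timed(|| sum_iter(&data));
    // Check that the "optimized" version still gives the same answer
    // before caring about which one is faster.
    assert_eq!(a, b);
    println!("loop: {:?}, iter: {:?}", t_a, t_b);
}
```

The equality check matters as much as the timings: it is the cheap way to catch the "you lose functionality in some edge cases" problem.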

Maybe you took 3 hours of profiling and made a loop 10% faster but you could have trivially rewritten it to run log n times instead of n times…
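A small sketch of the "log n times instead of n times" case, assuming the data is already sorted (an assumption not stated above). Both functions are illustrative and count how many elements they examine.

```rust
/// Linear scan: returns the index of `target` and how many elements were examined.
fn linear_steps(sorted: &[u32], target: u32) -> (Option<usize>, usize) {
    let mut steps = 0;
    for (i, &x) in sorted.iter().enumerate() {
        steps += 1;
        if x == target {
            return (Some(i), steps);
        }
    }
    (None, steps)
}

/// Binary search: halves the remaining range on every iteration.
fn binary_steps(sorted: &[u32], target: u32) -> (Option<usize>, usize) {
    let (mut lo, mut hi) = (0usize, sorted.len());
    let mut steps = 0;
    while lo < hi {
        steps += 1;
        let mid = lo + (hi - lo) / 2;
        if sorted[mid] == target {
            return (Some(mid), steps);
        }
        if sorted[mid] < target {
            lo = mid + 1;
        } else {
            hi = mid;
        }
    }
    (None, steps)
}

fn main() {
    let data: Vec<u32> = (0..1_000_000).collect();
    let target = 999_999;
    let (i_lin, s_lin) = linear_steps(&data, target);
    let (i_bin, s_bin) = binary_steps(&data, target);
    assert_eq!(i_lin, i_bin);
    println!("linear: {} steps, binary: {} steps", s_lin, s_bin);
}
```

For a million elements the linear scan examines up to a million of them, while the binary search needs at most 20 iterations. In real code you would likely reach for the standard library’s `binary_search` on slices instead of hand-rolling it.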

No, that’s what good programmers say (measure first, then optimize). Bad programmers use it to mean it’s perfectly fine to prematurely pessimize code because they can’t be bothered to spend 10 minutes to come up with something that isn’t O(n^2) because their set is only 5 elements long so it’s “not a big deal” and “it’s fine” even though the set will be hundreds or thousands of elements long under real load.
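To put numbers on "only 5 elements" versus "thousands under real load", here is a hedged sketch that counts the comparisons made by a naive all-pairs duplicate check. The function name and the input sizes are picked for illustration only.

```rust
/// Naive duplicate check that compares every pair of elements.
/// Returns whether a duplicate was found and how many comparisons were made.
fn has_duplicate_quadratic(items: &[u32]) -> (bool, u64) {
    let mut comparisons = 0u64;
    for i in 0..items.len() {
        for j in (i + 1)..items.len() {
            comparisons += 1;
            if items[i] == items[j] {
                return (true, comparisons);
            }
        }
    }
    (false, comparisons)
}

fn main() {
    for n in [5u32, 1_000, 5_000] {
        // Distinct values, so the check never exits early (the worst case).
        let items: Vec<u32> = (0..n).collect();
        let (_, comparisons) = has_duplicate_quadratic(&items);
        println!("n = {:>5}: {} comparisons", n, comparisons);
    }
}
```

With distinct input the count is n(n-1)/2: 10 comparisons for 5 elements, 499,500 for 1,000 and 12,497,500 for 5,000.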

It would be almost funny if it didn’t happen every single time.

A nice post, and certainly worth a read. One thing I want to add is that some programmers - good and experienced programmers - often put too much stock in the output of profiling tools. These tools can give a lot of details, but lack a bird’s eye view.

As an example, I’ve seen programmers attempt to optimise memory allocations again and again (custom allocators etc.), or optimise a hashing function, when a broader view of the program showed that many of those allocations or hashes could be avoided entirely.

In the context of the blog: do you really need a multi set, or would a simpler collection do? Why are you even keeping the data in that set - would a different algorithm work without it?

When you see that some internal loop is taking a lot of your program’s time, first ask yourself: why is this loop running so many times? Only after that should you start to think about how to make a single loop faster.
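A toy version of "why is this loop running so many times?", with a hypothetical `normalize` function standing in for whatever the profiler flags as hot. Instead of making `normalize` faster, the second version simply calls it far less often.

```rust
use std::cell::Cell;

/// Stand-in for an expensive per-item computation (hypothetical).
/// It bumps `calls` so we can see how often it runs.
fn normalize(word: &str, calls: &Cell<u32>) -> String {
    calls.set(calls.get() + 1);
    word.trim().to_lowercase()
}

/// Calls `normalize` twice for every pair of words: O(n^2) calls.
fn count_equal_pairs_naive(words: &[&str], calls: &Cell<u32>) -> usize {
    let mut pairs = 0;
    for i in 0..words.len() {
        for j in (i + 1)..words.len() {
            if normalize(words[i], calls) == normalize(words[j], calls) {
                pairs += 1;
            }
        }
    }
    pairs
}

/// Normalizes each word once up front: n calls. The pair loop only compares.
fn count_equal_pairs_hoisted(words: &[&str], calls: &Cell<u32>) -> usize {
    let keys: Vec<String> = words.iter().map(|w| normalize(w, calls)).collect();
    let mut pairs = 0;
    for i in 0..keys.len() {
        for j in (i + 1)..keys.len() {
            if keys[i] == keys[j] {
                pairs += 1;
            }
        }
    }
    pairs
}

fn main() {
    let words = ["Rust", " rust", "Go", "go ", "C"];
    let (naive_calls, hoisted_calls) = (Cell::new(0), Cell::new(0));
    let a = count_equal_pairs_naive(&words, &naive_calls);
    let b = count_equal_pairs_hoisted(&words, &hoisted_calls);
    assert_eq!(a, b);
    println!("naive: {} calls, hoisted: {} calls", naive_calls.get(), hoisted_calls.get());
}
```

For these five words the naive version calls `normalize` 20 times and the hoisted one 5 times, with the same result. A profiler would point at `normalize` in both cases; only stepping back shows that most of the calls were unnecessary.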

You don’t even need to go to a low level. Lots of programmers forget that, in general, their applications are not running on a piece of paper.

My team at work once had an app running on Kubernetes and it had a memory leak, so its pod would get terminated every few hours. Since there were multiple pods, this had effectively no effect on the clients.

The app in question was otherwise “done”, there were no new features needed, and we hadn’t seen another bug in years.

When we transferred ownership of the app to another team, they insisted on finding and fixing the memory leak. They spent almost a month finding the leak and refactoring the app. The practical effect was none - in fact, due to the normal pod scheduling, they didn’t even buy that much extra lifetime for each individual pod.

I get your point, but I do not think you should justify releasing crap code because you think it has minimal impact on the customer. A memory leak is a bug and just should not be there.

… and this is how IT ends up being responsible for a breach.

It is a bug, unexpected and undefined behavior - it shouldn’t have been there.

You are not wrong about the outcome from a capitalist view, but otherwise you are.

In project management lore there is the triple constraint: time, money, features. But there is another insidious dimension not talked about. That is risk.

The natural progression in a business, if there is no pushback, is that management wants every feature under the sun, now, and for no money. So the project team does the only thing it can do: increase risk.

The memory leak thing is an example of risk. It is also an example of some combination of poor project management, including insufficient pushback against management insanity, and bad business management in general, which might be an even bigger problem.

My point: this is a common, natural path of things, but it does not always have to be tolerated.

Exactly.

If you are exiting with a memory leak, Linux is having to wipe the floor for you.

Fun read, I’ve never heard the full quote before.